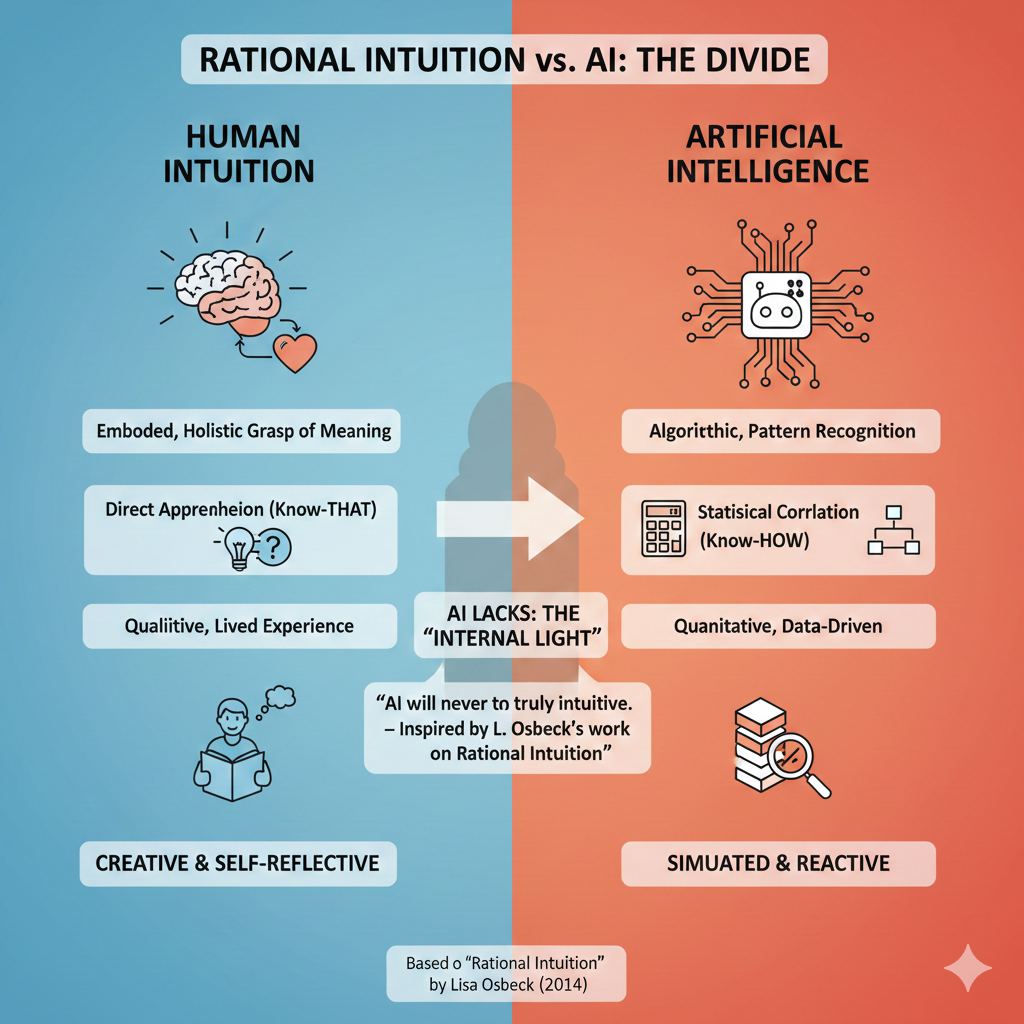

For months, Lisa Osbeck’s Rational Intuition has been my shield against AI anxiety. Her work proves that AI will never possess the “Internal Light” of human intuition—that direct, embodied grasp of truth. But recently, I’ve realized that my lack of fear toward the machine has been replaced by a deep concern for the human behind the machine.

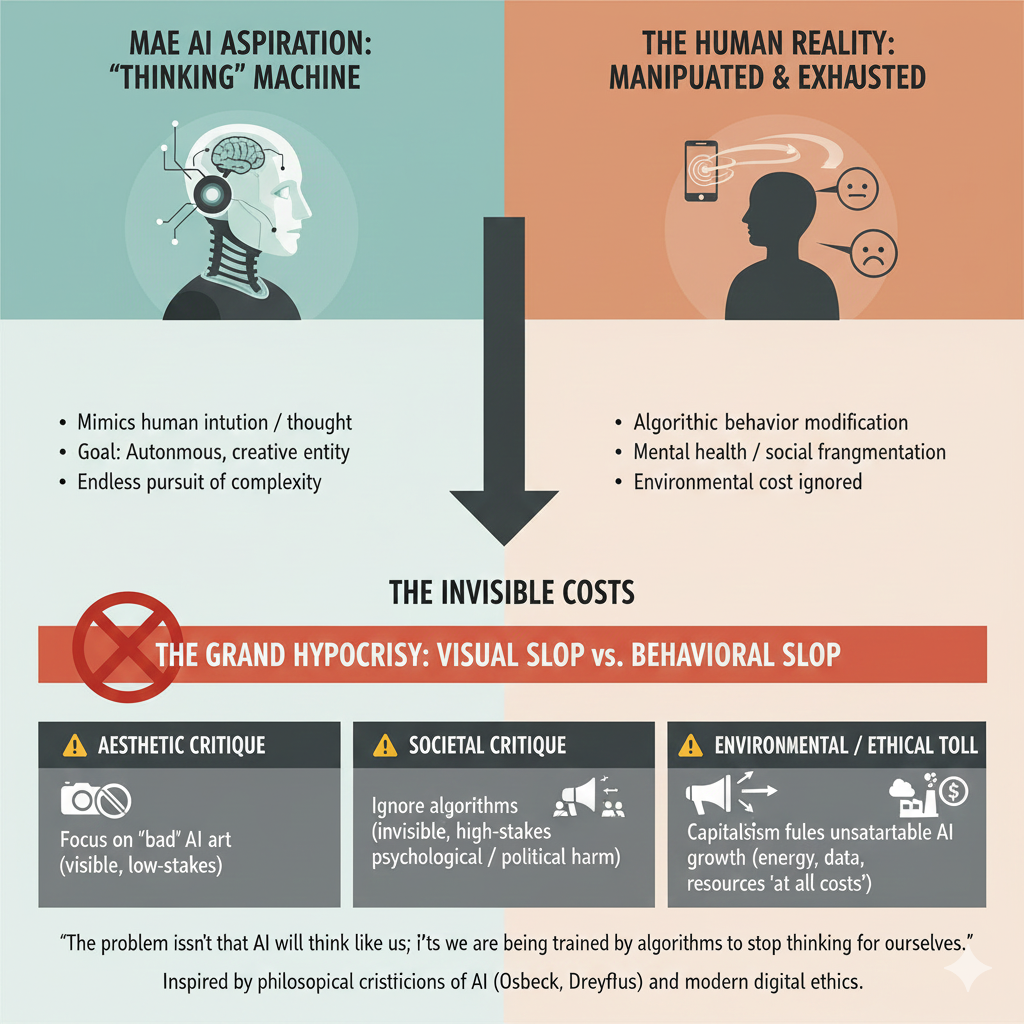

The problem isn’t that AI is becoming too human. The problem is that the “Broligarchs,” the tech elite and managers driving the hunger for AI, are trying to convince us that humans are just biological machines.

1. The “Moral Compass” Gap

AI is a tool, like a car or a spellchecker. But the Broligarch doesn’t want a tool; they want an aspiration. They want to replace the messiness of human intuition with the “cleanliness” of an algorithm.

When a manager tells you that AI can replace human thinking, they aren’t praising the AI. They are admitting that they have lost their own moral compass. They see leadership as a series of A/B tests rather than a responsibility to people.

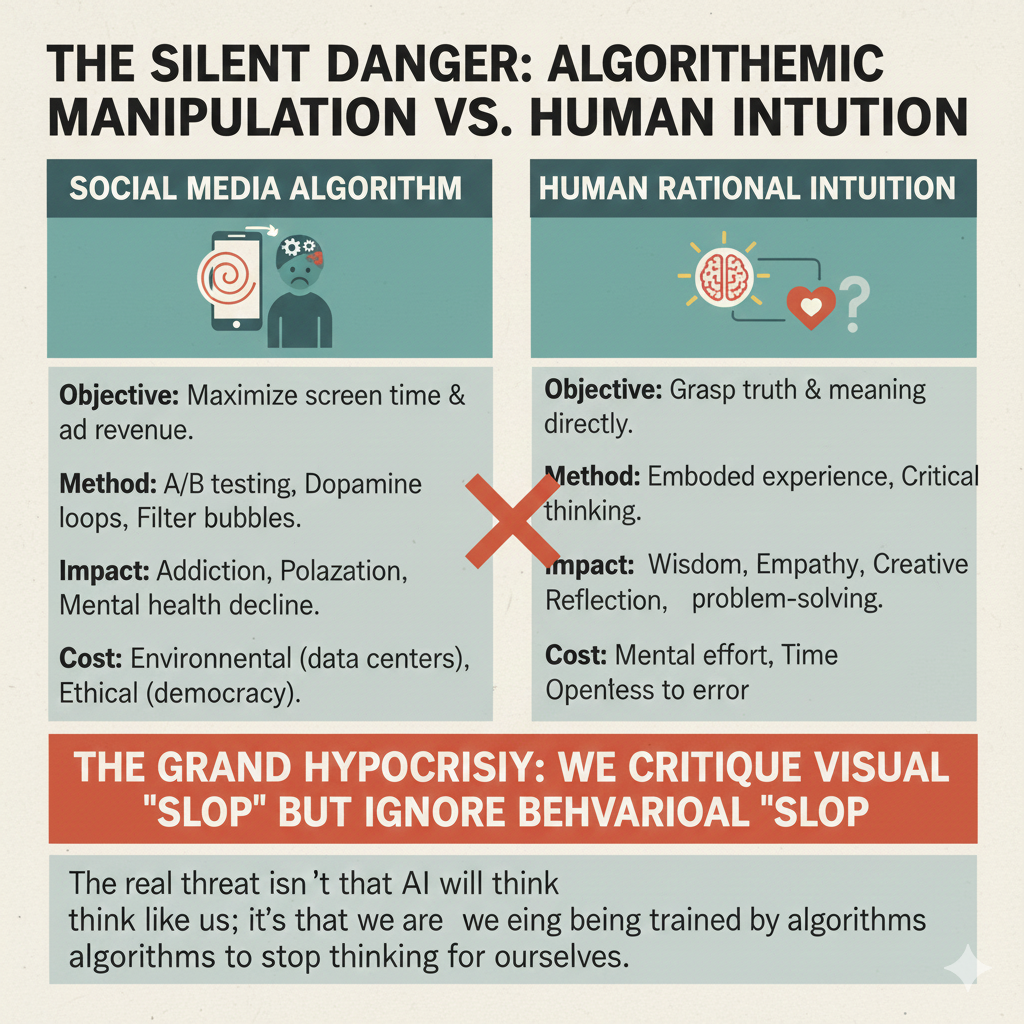

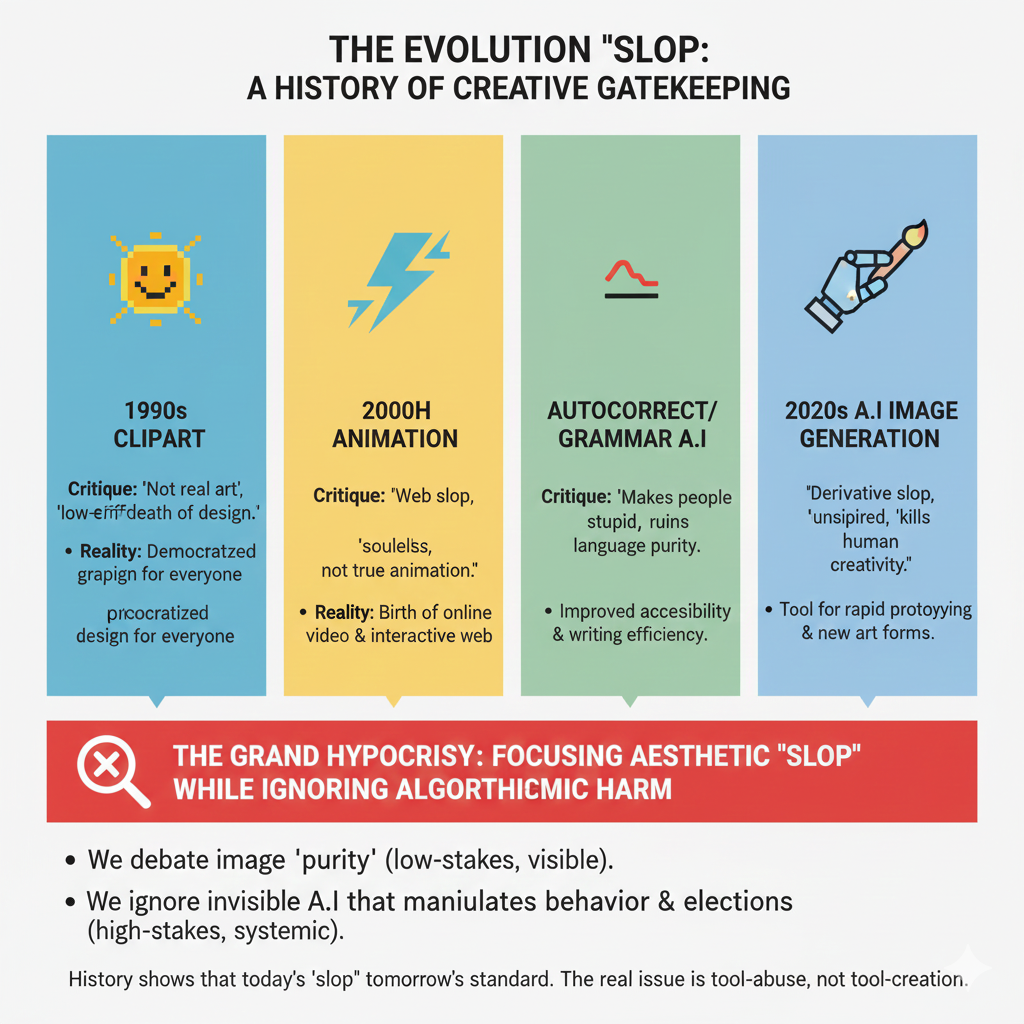

2. The Hypocrisy of “Slop”

We are currently obsessed with a “purity test” for art. We call AI images “slop” and fight to protect the “soul” of the comic book artist. But this is the same gatekeeping we saw with Clipart and Flash. Tools evolve. The car replaced the horse; the calculator replaced the slide rule.

The real “slop” isn’t the weird-looking AI image. The real slop is the behavioral manipulation happening in the background. While we argue about pixels, the Facebook algorithm is quietly reshaping our psychology, fueling polarization, and affecting elections. We are gatekeeping the “purity” of the output while ignoring the impurity of the system.

3. The Capitalist Hunger and the Environmental Exhaust

The push to make AI “intuitive” (which it will never be) comes at a staggering cost. The Broligarchs are burning oceans of water and coal to power the search for a “Silicon God.”

| Feature | AI Image “Slop” | The Broligarch’s Algorithm |
| --- | --- | --- |
| The Fear | “It’s fake art.” | The Reality: It’s behavioral control. |
| The Cost | Aesthetic offense. | Environmental destruction and psychological harm. |
| The Goal | Accessibility for the masses. | Extraction of data for infinite profit. |

Conclusion: Reclaiming the Internal Light

The machine is a mirror. If it looks soulless, it’s because the people holding the mirror have traded their intuition for metrics. We need to stop fearing the “robot takeover” and start demanding a moral compass from the humans in charge.

AI is a car. It’s time we stopped letting the Broligarchs tell us the car can drive itself while they steer us off a cliff.

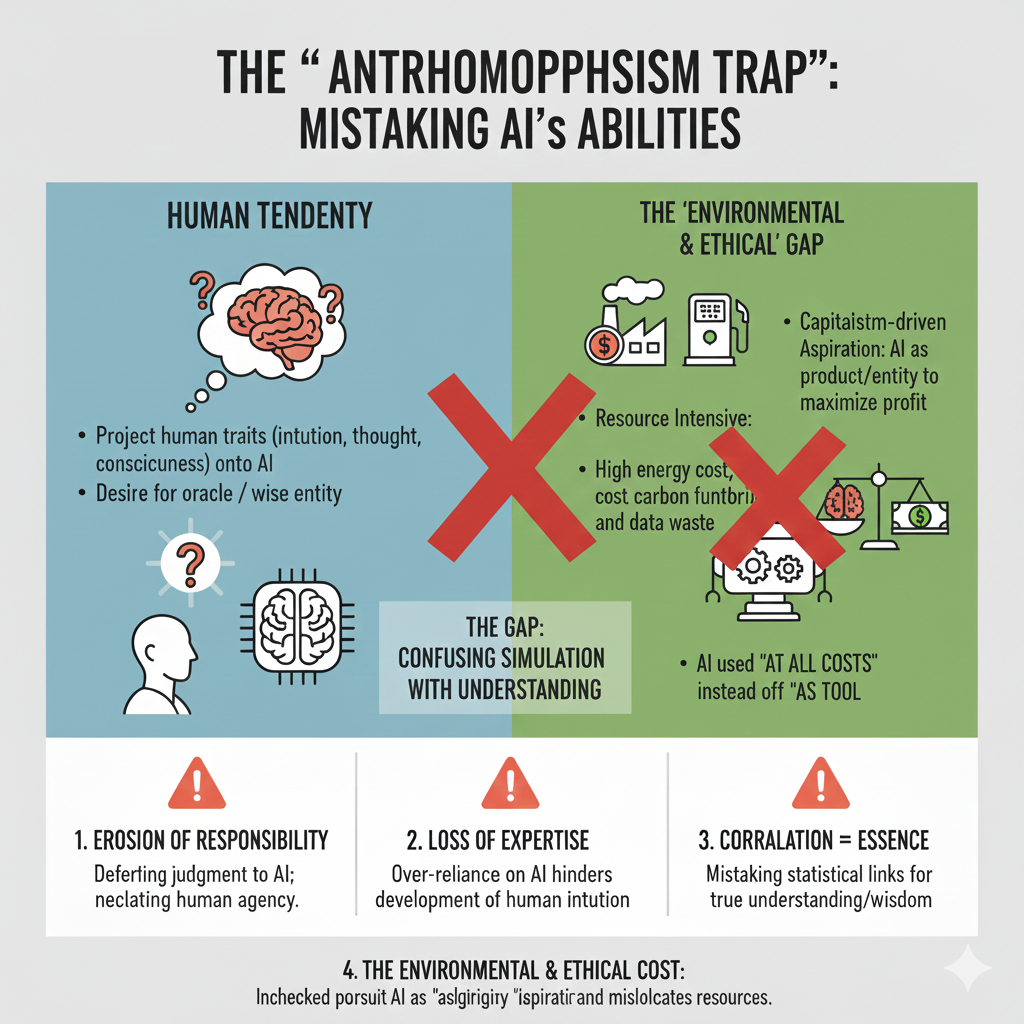

Final Closing: The Moral Compass in a Machine Age

As I look at the very images I used for this post—generated by the AI I’m critiquing—I see the cracks. Look closely at the text in these infographics; you’ll see spelling mistakes and garbled logic. The tool is improving, but it is far from perfect. It is a “coin counter”—it takes my original, messy thoughts and arranges them neatly, but it doesn’t understand the value of the currency it’s counting.

I will continue to use AI. I can’t draw a stickman to save my life, and Photoshop’s AI functions save me hours of tedious work. For people with different abilities, these are not just “toys”; they are essential tools for accessibility and expression.

But we have to ask: where do we draw the line?

Do we really need AI to scan resumes, stripping the human story away from someone applying for a job? Do we need it to “summarize” the nuances of a Google review or a heartfelt comment? Most importantly, do we need the psychological dangers of the Facebook algorithm or the “Twitter bot armies” that are currently being steered by the moral compasses of the “Broligarchs”?

Maybe it’s not the technology that needs “fixing,” but our own trade-offs.

- Data centers don’t need to grow exponentially if we decide that the environmental risk isn’t worth a slightly faster chatbot.

- Capitalism doesn’t need to be a “hunger at all costs” if we prioritize human expertise over algorithmic efficiency.

At the end of the day, AI helped me write this. It cleaned up my grammar and arranged my sentences. It acted as a mirror, but I was the one standing in front of it. We need to look at our own moral compasses and decide what kind of world we actually want to build.

Do we want a world of “perfect” automated slop, or a world where the “Internal Light” of human intuition still guides the way?

Below are unedited images generated by Gemini, showing the concepts and, of course, the glitches.